Storage IOPS Performance Benchmarks

Testing Methodology

Random read and write IOPS are measured in a VM running Linux or Windows. The IOPS measured in the VM indicate the maximum IOPS available to the workloads running in that VM. The measured IOPS are then compared with the performance numbers published by the vendor of the physical NVMe drive to show how much of the raw performance the VM can consume.
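The comparison against the vendor's published figure is a simple ratio. The following is a minimal sketch of that arithmetic; the function name and the example numbers are hypothetical and are not taken from any datasheet or from the results in this document.

```python
def percent_of_vendor_spec(measured_iops: float, vendor_iops: float) -> float:
    """Return the IOPS measured in the VM as a percentage of the vendor's published IOPS."""
    if vendor_iops <= 0:
        raise ValueError("vendor_iops must be positive")
    return 100.0 * measured_iops / vendor_iops


# Hypothetical values for illustration only.
share = percent_of_vendor_spec(measured_iops=450_000, vendor_iops=600_000)
print(f"The VM reaches {share:.1f}% of the drive's rated IOPS")
```

Random read and random write should be compared separately, since vendors usually publish a distinct figure for each.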

Testing Tool

FIO

Supported OS: Linux and Windows

FIO is an industry-standard benchmark for measuring disk I/O performance. It spawns a number of threads or processes that perform a particular type of I/O action as specified by the user. We include it here as a baseline measurement on all infrastructure, to compare the raw disk performance that any application could potentially achieve.

VDBench

Supported OS: Linux and Windows

VDBench is a command line utility specifically created to help engineers and customers generate disk I/O workloads to be used for validating storage performance and storage data integrity.

Test Setup

FIO - Ubuntu 18.04

Stand-alone Host

FIO can run as a stand-alone tool on the host, generating the I/O workload against the local storage, i.e. the storage to be tested.

- Create an Ubuntu VM with three disks attached: one disk is the boot drive and the other two are used for the storage performance test. A tutorial for the VM setup on the Sunlight platform is available here.

- Prepare the VM with the proper IRQ balance settings. (A script is available here.)

- Download the FIO tool (a binary executable file is available for download here).

- Provide an FIO configuration file to specify the relevant parameters, including the disk to test, I/O action (rw=randread for random read or rw=randwrite for random write), block size and iodepth. An example for Ubuntu to test the 2nd and 3rd drives simultaneously is provided below and also available for download here.

[global]

norandommap

gtod_reduce=1

group_reporting=1

ioengine=libaio

time_based=1

rw=randread

bs=4k

direct=1

ramp_time=5

runtime=30

iodepth_batch=32

iodepth_batch_complete=8

iodepth_low=16

iodepth=32

loops=3

[job1]

filename=/dev/xvdb

[job2]

filename=/dev/xvdc

- Run the FIO test with the configuration file, using the numjobs parameter to specify the number of cores used for the test.

sudo ./fio-3.2 randread.fio --numjobs=16

The same FIO configuration file is used when comparing VMs running in different environments. Make sure to change the configuration file so that it points at the block device you wish to test, e.g. /dev/sda or /dev/xvda.
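Because only the device names and the I/O action change between runs, the job file can be generated rather than edited by hand. The sketch below reproduces the Linux example configuration from this section; the function name `render_job_file` and the `GLOBAL_OPTIONS` table are illustrative helpers, not part of FIO.

```python
# Global options copied from the Linux example configuration in this section.
# A value of None marks an option that is written without "=value".
GLOBAL_OPTIONS = {
    "norandommap": None,
    "gtod_reduce": 1,
    "group_reporting": 1,
    "ioengine": "libaio",
    "time_based": 1,
    "rw": "randread",
    "bs": "4k",
    "direct": 1,
    "ramp_time": 5,
    "runtime": 30,
    "iodepth_batch": 32,
    "iodepth_batch_complete": 8,
    "iodepth_low": 16,
    "iodepth": 32,
    "loops": 3,
}


def render_job_file(devices, rw="randread"):
    """Return job-file text with one [jobN] section per block device."""
    if rw not in ("randread", "randwrite"):
        raise ValueError("rw must be 'randread' or 'randwrite'")
    if not devices:
        raise ValueError("at least one device is required")
    options = dict(GLOBAL_OPTIONS, rw=rw)  # override rw, keep the option order
    lines = ["[global]"]
    for key, value in options.items():
        lines.append(key if value is None else f"{key}={value}")
    for index, device in enumerate(devices, start=1):
        lines += ["", f"[job{index}]", f"filename={device}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print a random-write variant for the two data disks used in the example.
    print(render_job_file(["/dev/xvdb", "/dev/xvdc"], rw="randwrite"), end="")
```

The output can be redirected to a file such as randwrite.fio and passed to FIO in the same way as randread.fio.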

Multiple Hosts in Parallel

In addition to the stand-alone test, FIO can also run as a backend server on several hosts to generate the I/O workload on those hosts in parallel.

- On the host where the I/O workload is generated against the local storage, start an FIO backend server, which listens for incoming requests.

sudo ./fio-3.2 --server

- Start the FIO backend server on every host whose local storage is to be tested.

- On the host that controls the I/O workload on all backend servers and collects the test results, start FIO with the IP address or hostname of each host running an FIO backend server and the configuration file located on that backend server. The configuration file should include all the parameters shown in the example below, which is the same as the configuration file used for the stand-alone test with the numjobs parameter added.

[global]

norandommap

gtod_reduce=1

group_reporting=1

ioengine=libaio

time_based=1

rw=randread

bs=4k

direct=1

ramp_time=5

runtime=30

iodepth_batch=32

iodepth_batch_complete=8

iodepth_low=16

iodepth=32

loops=3

[job1]

filename=/dev/xvdb

numjobs=8

- Multiple backend servers can be connected to run the I/O workloads in parallel. The following example starts an FIO test on two hosts.

sudo ./fio-3.2 --client=192.168.1.2 --remote-config=randread.fio --client=192.168.1.3 --remote-config=randread.fio

- FIO reports the results from each individual host as well as the aggregated result across all hosts.
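With more than a couple of hosts, the controller command becomes long and repetitive. As a minimal sketch, the argument list can be assembled from a list of hosts; the helper name `build_client_command` is hypothetical, and it assumes, as in the example above, that the same configuration file name exists on every backend server.

```python
import shlex


def build_client_command(hosts, remote_config, fio_binary="./fio-3.2"):
    """Build the controller command: one --client/--remote-config pair per host."""
    if not hosts:
        raise ValueError("at least one backend host is required")
    argv = ["sudo", fio_binary]
    for host in hosts:
        argv.append(f"--client={host}")
        argv.append(f"--remote-config={remote_config}")
    return argv


if __name__ == "__main__":
    command = build_client_command(["192.168.1.2", "192.168.1.3"], "randread.fio")
    # Print the command for review; pass the list to subprocess.run() to execute it.
    print(shlex.join(command))
```

Keeping the command as a list avoids quoting problems if a hostname or path ever contains unusual characters.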

FIO - CentOS 7 and RHEL 7

Running FIO on CentOS 7 or RHEL 7 requires one extra step in addition to the steps described above for Ubuntu 18.04.

- Install the kernel-ml package from the ELRepo (Enterprise Linux) repository, because the stock kernel does not support multi-queue blkfront.

sudo rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

sudo yum -y install https://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

sudo yum --enablerepo=elrepo-kernel install kernel-ml

sudo grub2-set-default 0

sudo reboot

- Prepare the VM with the proper IRQ balance settings. (A script is available here.)

FIO - Windows Server 2016

- Create a Windows VM with three disks attached: one disk is the boot drive and the other two are used for the storage performance test. A tutorial for the VM setup on the Sunlight platform is available here.

- Download the FIO tool (a Windows installer is available for download from Sunlight or a third party).

- Run the installer to install the FIO program to the Windows VM.

- Provide an FIO configuration file to specify the relevant parameters, including the disk to test, I/O action (rw=randread for random read or rw=randwrite for random write), block size and iodepth. An example for Windows to test the 2nd and 3rd drives simultaneously is provided below and also available for download here.

[global]

norandommap

gtod_reduce=1

group_reporting=1

ioengine=windowsaio

time_based=1

rw=randread

bs=4k

direct=1

ramp_time=5

runtime=30

iodepth_batch=32

iodepth_batch_complete=8

iodepth_low=16

iodepth=32

loops=3

thread=1

[job1]

filename=\\.\PhysicalDrive1

[job2]

filename=\\.\PhysicalDrive2

- Run the FIO test with the configuration file, using the numjobs parameter to specify the number of cores used for the test.

fio win_randread.fio --numjobs=4
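To turn a run into figures expressed in thousands of IOPS, FIO can write its report as JSON (for example by adding --output-format=json and --output=result.json to the command line), and the per-job values can then be summed. The sketch below assumes the report contains a top-level "jobs" list whose entries carry "read" and "write" objects with an "iops" field; the function name and the sample report are hand-written illustrations, not output from a real run.

```python
import json


def summarize_iops(fio_json_text: str) -> dict:
    """Sum read and write IOPS over all reported jobs and return them in thousands."""
    report = json.loads(fio_json_text)
    totals = {"read": 0.0, "write": 0.0}
    for job in report.get("jobs", []):
        for direction in totals:
            totals[direction] += float(job.get(direction, {}).get("iops", 0.0))
    return {direction: total / 1000.0 for direction, total in totals.items()}


# Abridged, made-up report used only to exercise the function.
SAMPLE_REPORT = """
{"jobs": [
  {"jobname": "job1", "read": {"iops": 120000.0}, "write": {"iops": 0.0}},
  {"jobname": "job2", "read": {"iops": 130000.0}, "write": {"iops": 0.0}}
]}
"""

if __name__ == "__main__":
    print(summarize_iops(SAMPLE_REPORT))
```

When group_reporting=1 is set, FIO may already merge the jobs into a single entry; summing over the list handles both cases.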

Results

FIO - IOPS (4K) from VMs running on Sunlight on-prem

| Platform | OS | Number of CPU cores | Disk size (GB) | Random Read IOPS (k) | Random Write IOPS (k) |
|---|---|---|---|---|---|
| Intel BP | Ubuntu 18.04 | 16 | 2 x 80 | 750 | 700 |
| Intel BP | Windows Server 2016 | 16 | 2 x 80 | 90 | 100 |
| Altos R385 F4 | Ubuntu 18.04 | 8 | 1 x 80 | 804 | 677 |
| Altos R385 F5 | Ubuntu 18.04 | 8 | 1 x 80 | 889 | 635 |
| Altos R385 F5 | Ubuntu 18.04 | 16 | 2 x 80 | 1040 | - |
| Altos R389 F4 | Ubuntu 18.04 | 8 | 1 x 80 | 751 | 630 |
| Altos R389 F4 | Ubuntu 18.04 | 8 | 3 x 80 | 1074 | - |
| Altos R365 F5 | Ubuntu 18.04 | 4 | 1 x 80 | 515 | 594 |
| Altos R365 F5 | Ubuntu 18.04 | 8 | 2 x 80 | 995 | 1015 |

FIO - IOPS (4K) from VMs running on Sunlight at the Edge

| Platform | OS | Number of CPU cores | Disk size (GB) | Random Read IOPS (k) | Random Write IOPS (k) |
|---|---|---|---|---|---|
| Altos R320 F5 | Ubuntu 18.04 | 6 | 1 x 40 | 547 | 212 |
| Lenovo ThinkSystem SE350 | Ubuntu 18.04 | 6 | 1 x 40 | 408 | 224 |
| Lenovo ThinkSystem SE350 | Ubuntu 18.04 | 16 | 2 x 40 | 854 | 410 |
| Lenovo ThinkSystem SE350 | Ubuntu 18.04 | 16 | 3 x 40 | 993 | 436 |
| LanternEdge HarshPro™ IP66 Server | Ubuntu 18.04 | 4 | 2 x 40 | 342 | 290 |
| LanternEdge HarshPro™ IP66 Server | Ubuntu 18.04 | 8 | 3 x 40 | 557 | 422 |
| LanternEdge HarshPro™ IP66 Server | Ubuntu 18.04 | 12 | 3 x 40 | 935 | 499 |

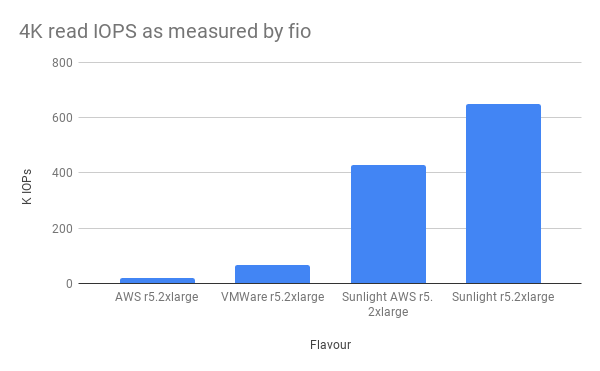

FIO - IOPS (4K) from Ubuntu on different platforms

| Flavour | Read IOPS |
|---|---|
| AWS r5.2xlarge + 50K reserved iops | 18.7 |
| Sunlight AWS r5.2xlarge | 428 |
| Sunlight r5.2xlarge | 651 |
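The gap between the flavours is easier to read as a ratio against the plain AWS instance. The sketch below computes that ratio from the three values in the table, assuming all three are expressed in the same unit; the helper name `speedup_over` is illustrative.

```python
# Read IOPS values copied from the table above (assumed to share one unit).
READ_IOPS = {
    "AWS r5.2xlarge + 50K reserved iops": 18.7,
    "Sunlight AWS r5.2xlarge": 428,
    "Sunlight r5.2xlarge": 651,
}


def speedup_over(baseline: str, results: dict) -> dict:
    """Return each flavour's read IOPS divided by the baseline flavour's read IOPS."""
    base = results[baseline]
    if base <= 0:
        raise ValueError("baseline IOPS must be positive")
    return {name: value / base for name, value in results.items()}


if __name__ == "__main__":
    ratios = speedup_over("AWS r5.2xlarge + 50K reserved iops", READ_IOPS)
    for name, ratio in ratios.items():
        print(f"{name}: {ratio:.1f}x")
```

On these figures the two Sunlight flavours come out at roughly 23 and 35 times the read IOPS of the AWS baseline.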